Recommended Videos

Good Companies Need More Software Engineers #github #layoffs

2025. 7. 6.

Natural Language Processing using RUST | NLP | Text Processing | cpu | CUDA | Sentiments

2024. 10. 4.

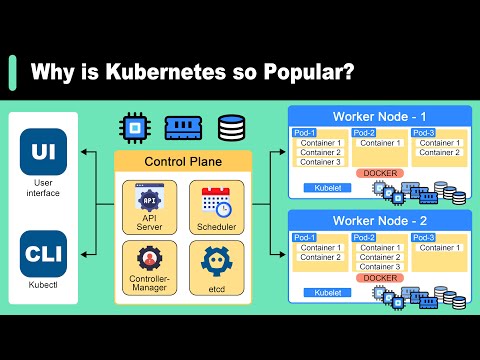

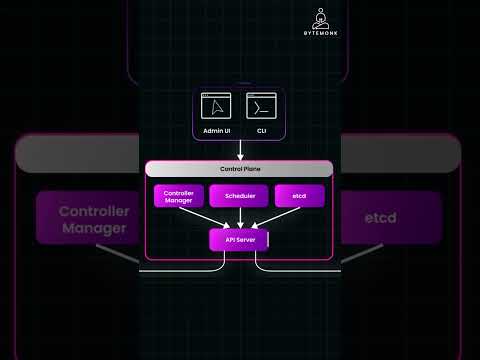

Why is Kubernetes Popular | What is Kubernetes?

2024. 10. 8.

![Spring Camp 2024 [Track 1] 3. Why Do I Dislike Writing Tests? (조성아)](https://i1.ytimg.com/vi/pCE7ibRCZEI/hqdefault.jpg)

Spring Camp 2024 [Track 1] 3. Why Do I Dislike Writing Tests? (조성아)

2024. 8. 30.

Kubernetes Explained: Pods & Control Plane

2024. 11. 2.

Claude Subagents are Absolutely Insane

2025. 12. 24.